Enabling Next-gen Automation with Multimodal Cognitive Perception

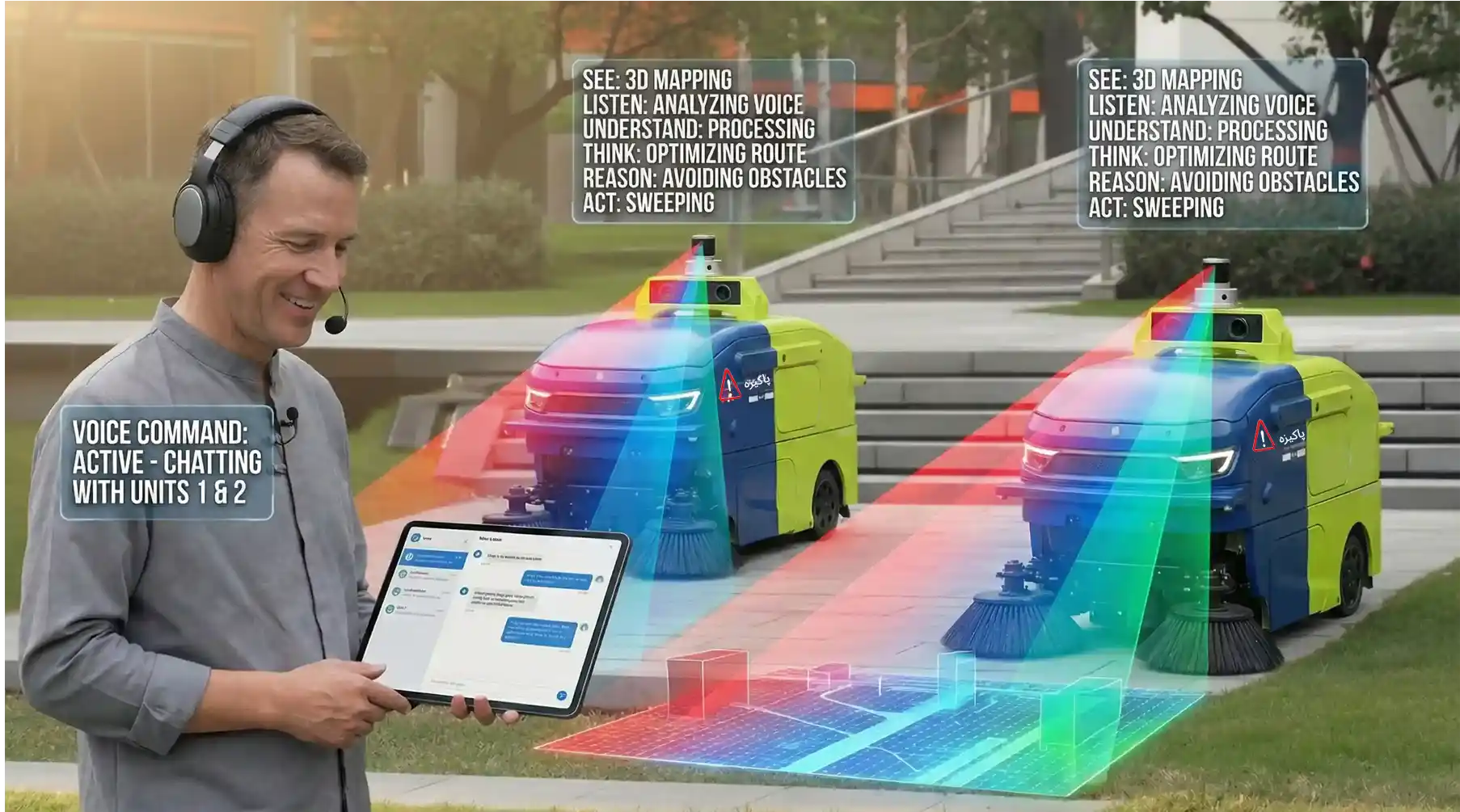

to See, Listen, Speak, Think, Reason & Act

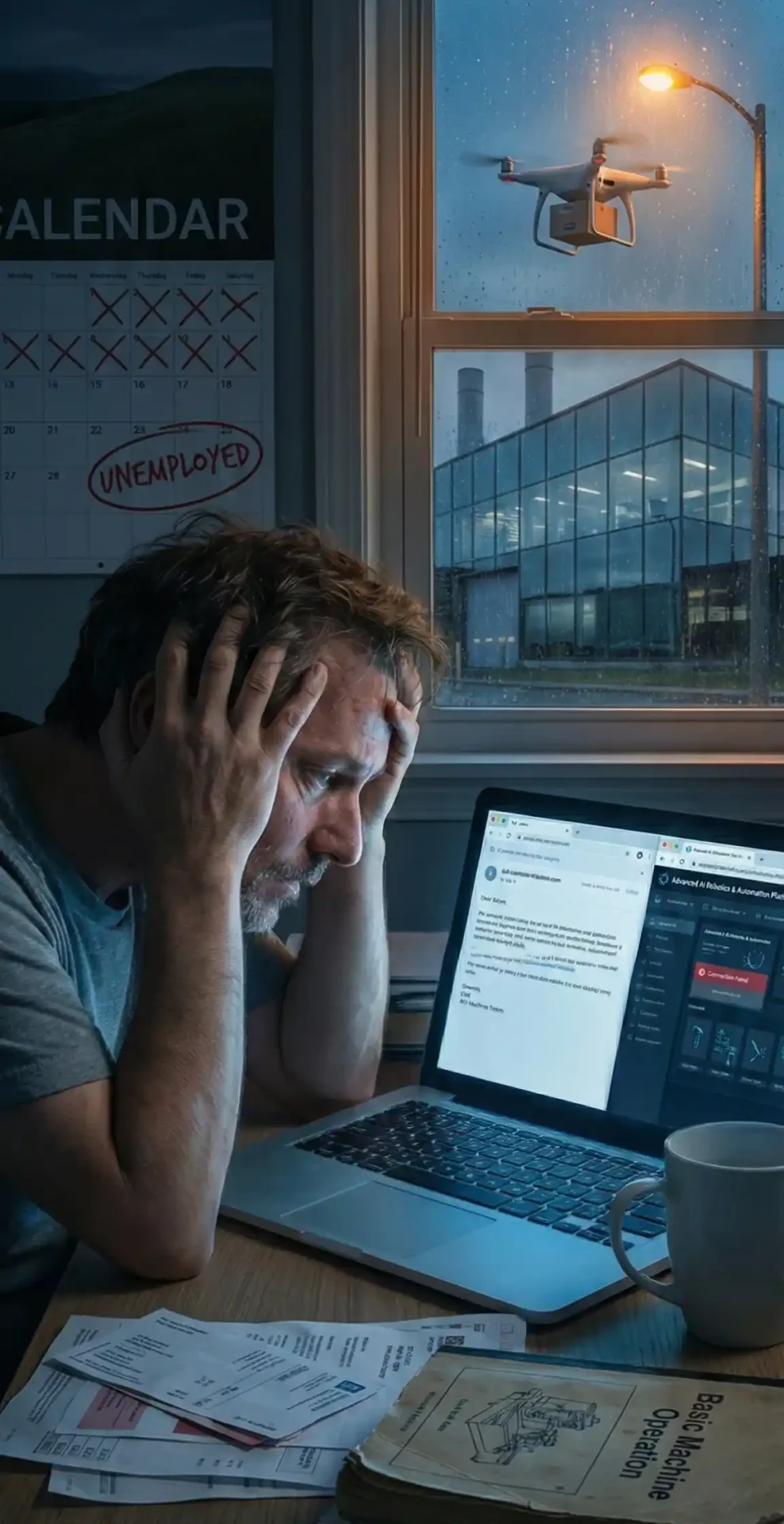

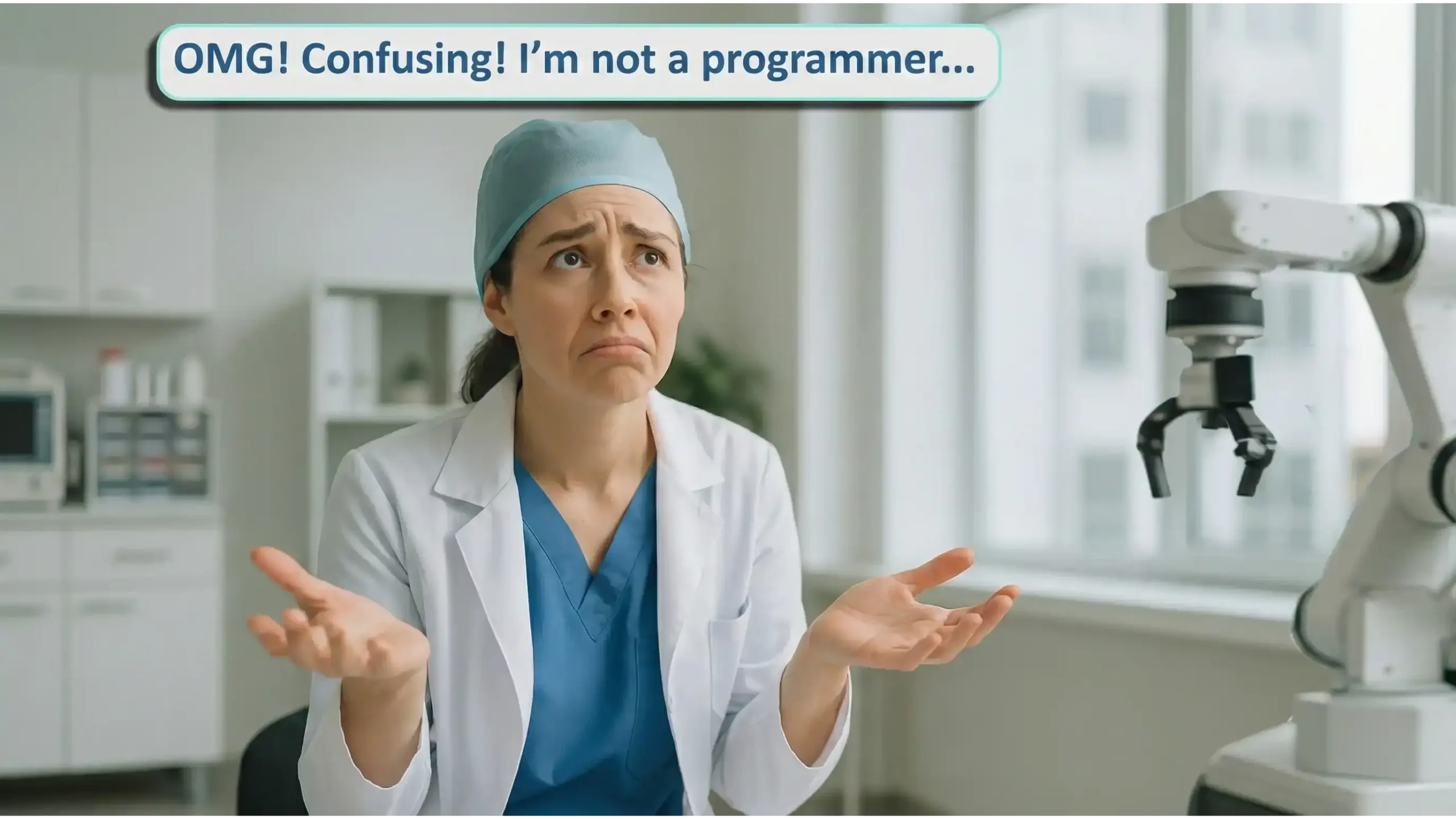

Are you among the 99.8% standing outside the world of physical automation, uncertain how to connect with next-gen intelligent machines and worried it’s speeding toward a future you can’t join?

Enabling Stem Cells of Automation

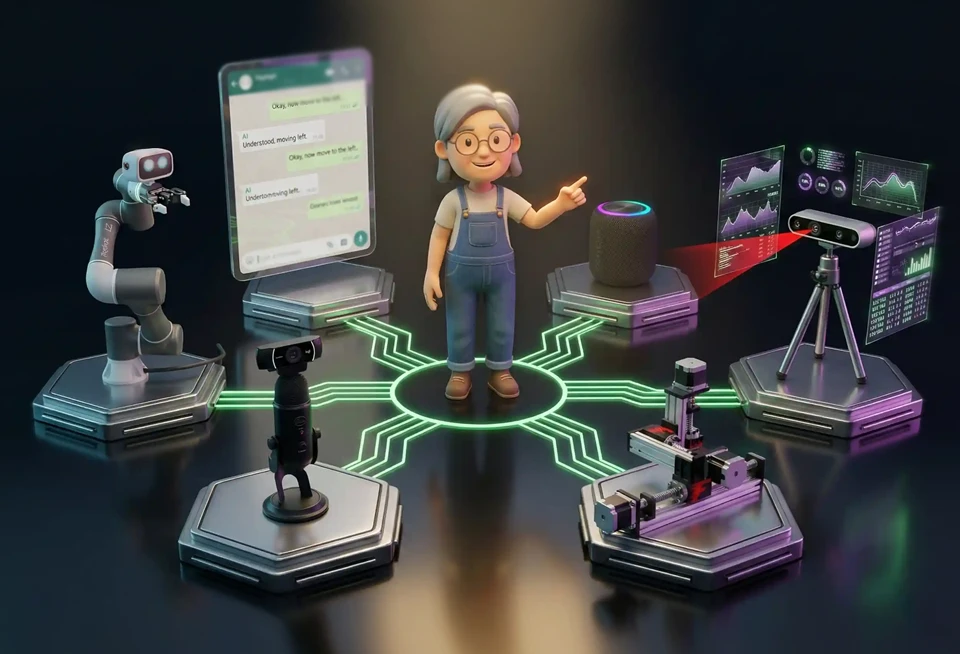

AI-driven ACTUATORS to See, Listen, Speak, Think, Reason & Act

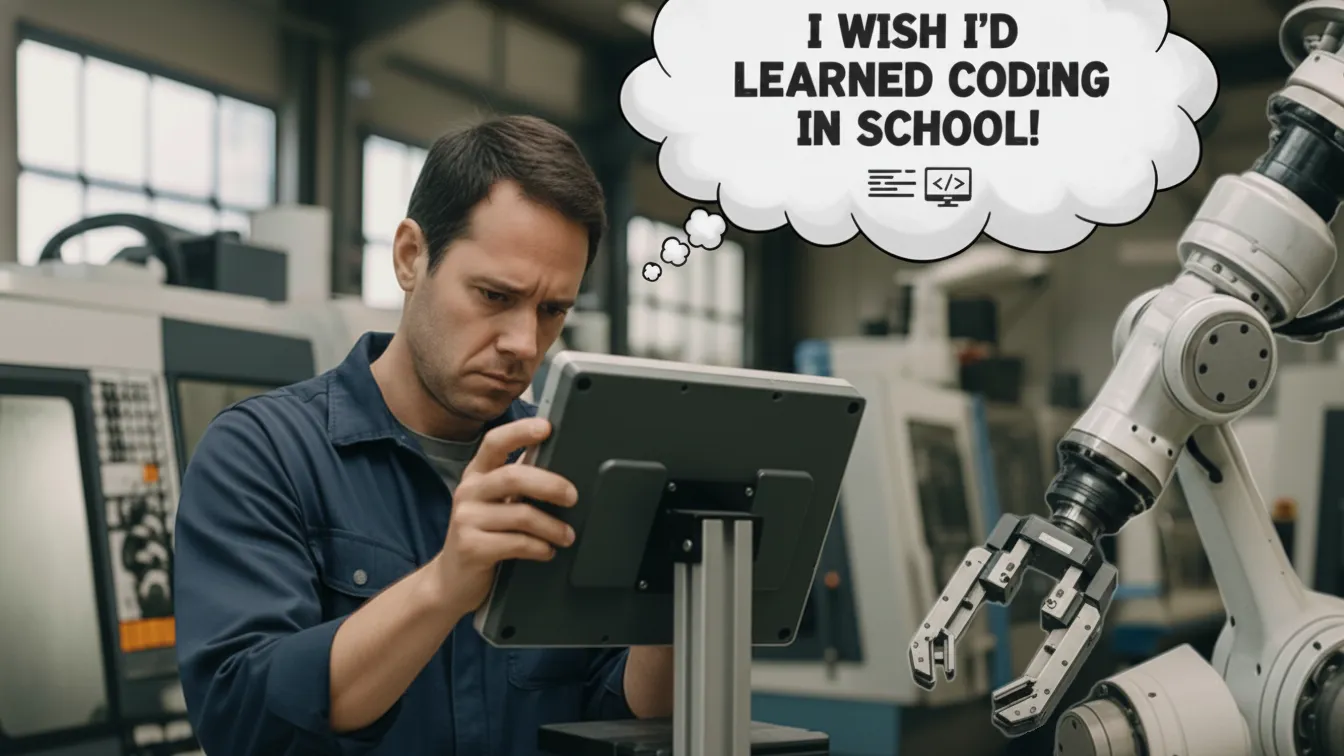

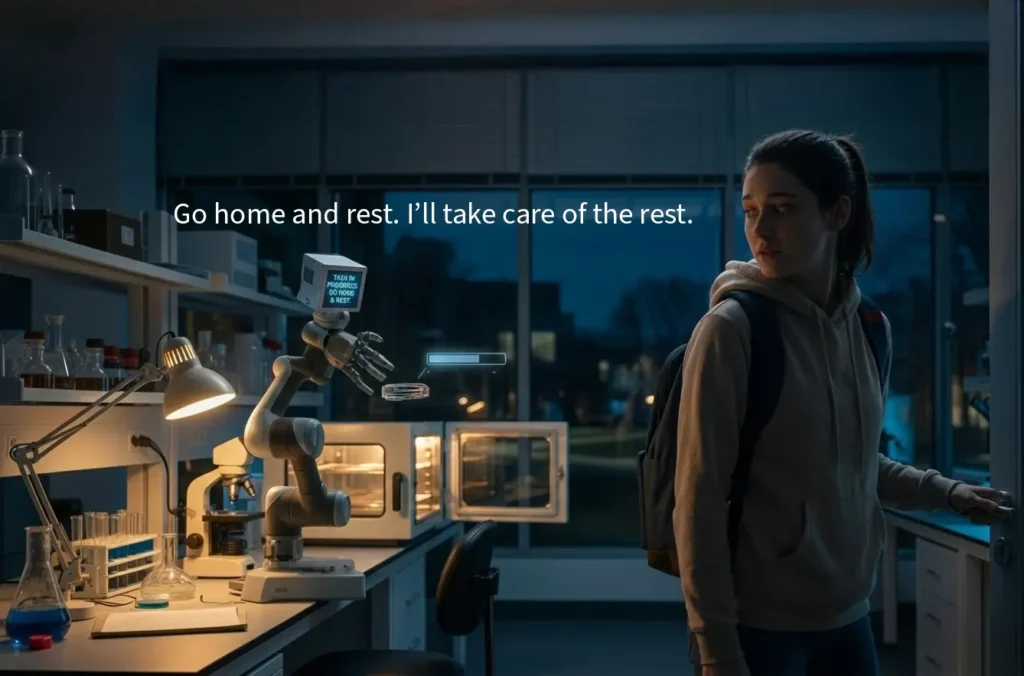

As AI and automation accelerate, millions of workers face the real threat of job loss. The growing human-machine communication & supervisory gap is preventing people from adapting, keeping their roles, or transitioning into the new automation-driven workforce. But can we force everyone to learn AI, automation, vibe coding & mechatronics?

Over 90% of people cannot program, control, or run the modern intelligent machines known as "physical automation".

Many can use AI only for basic tasks such as image or video creation, text editing, or writing software code with AI assistants.

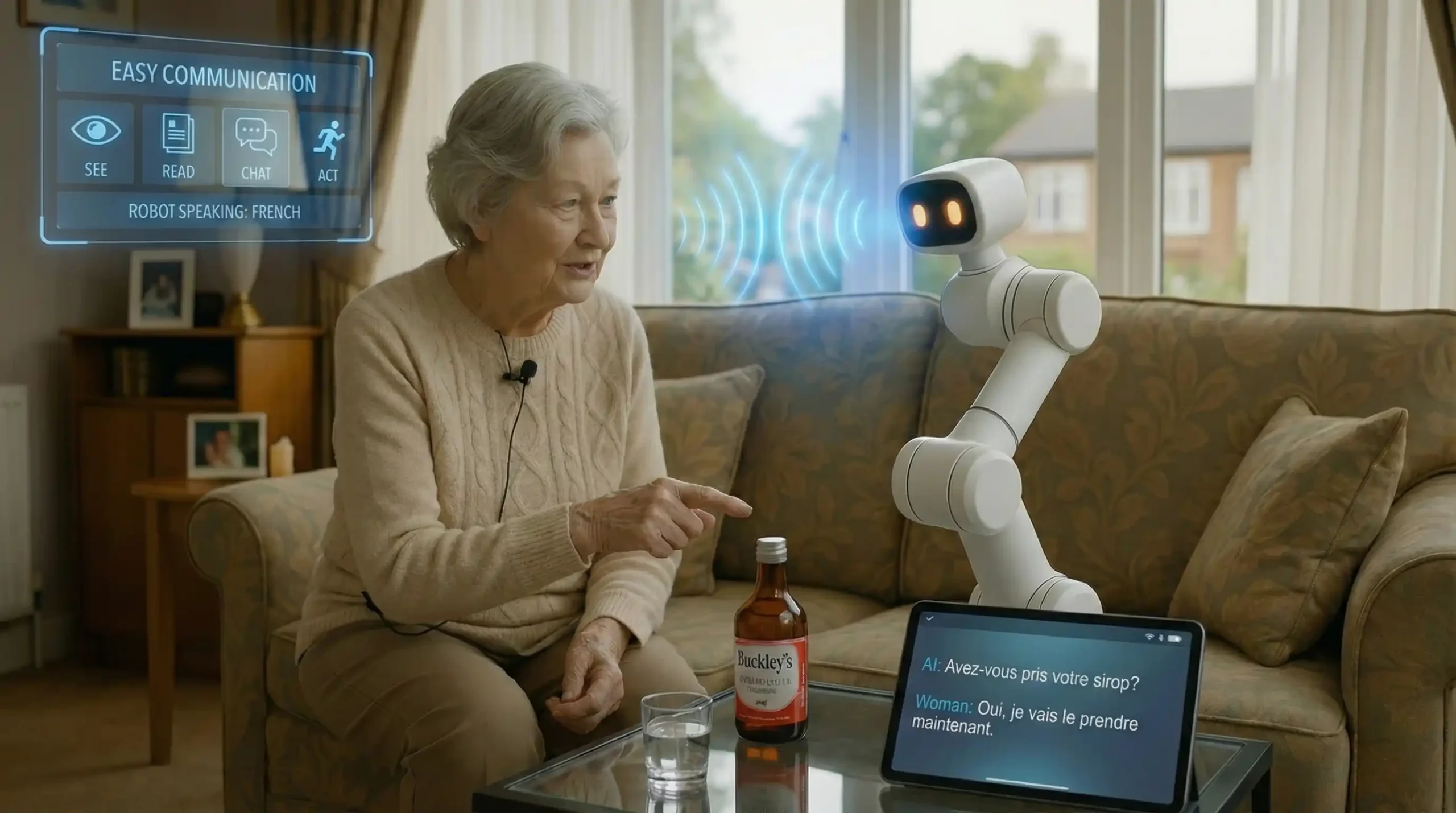

This burden is especially heavy for non-technical individuals and older adults who struggle to keep pace with fast-changing tools.

We address a growing challenge caused by the rapid acceleration of AI, automation, and robotics.

AI Automation Designed to EMPOWER

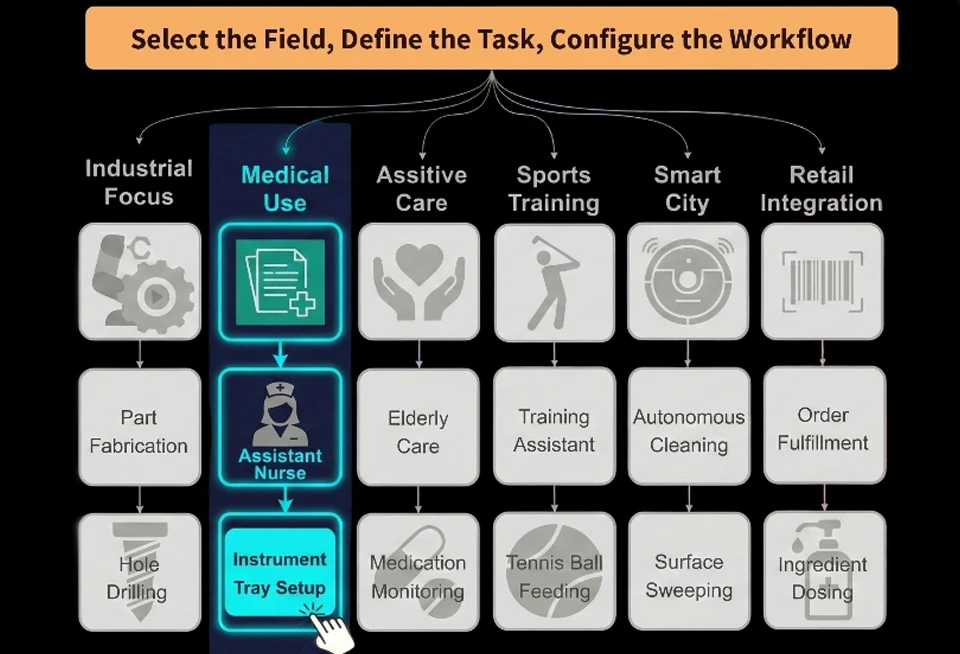

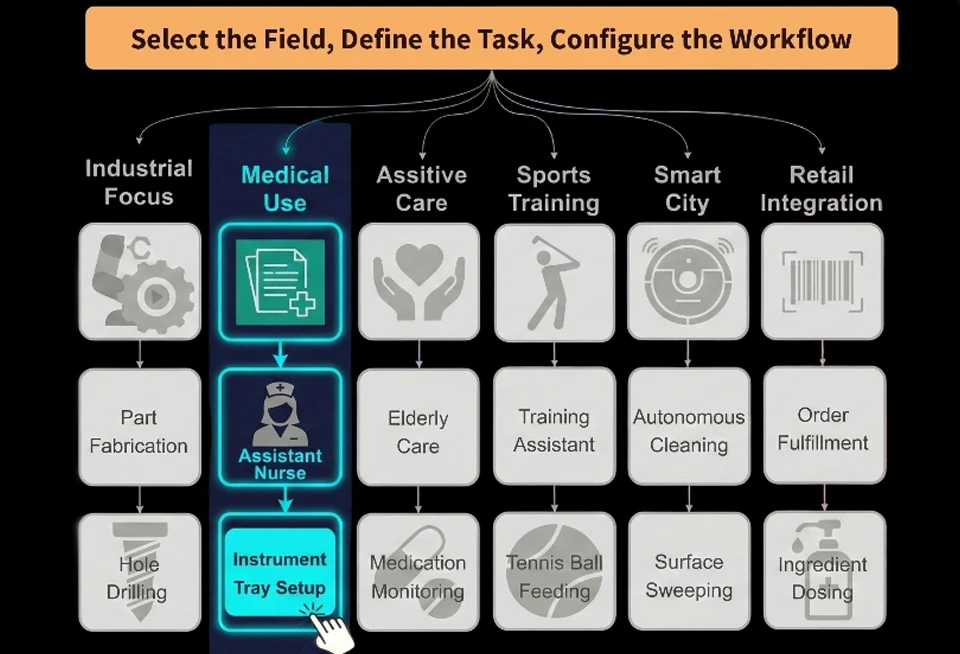

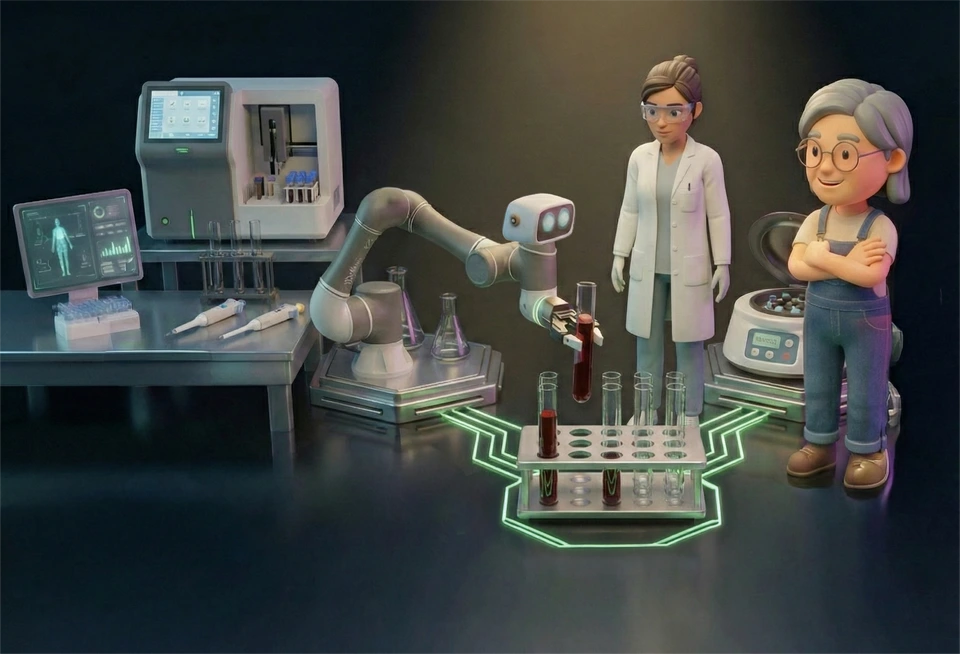

Advancing research and education through deep learning, VLA models, and intuitive no-code automation.

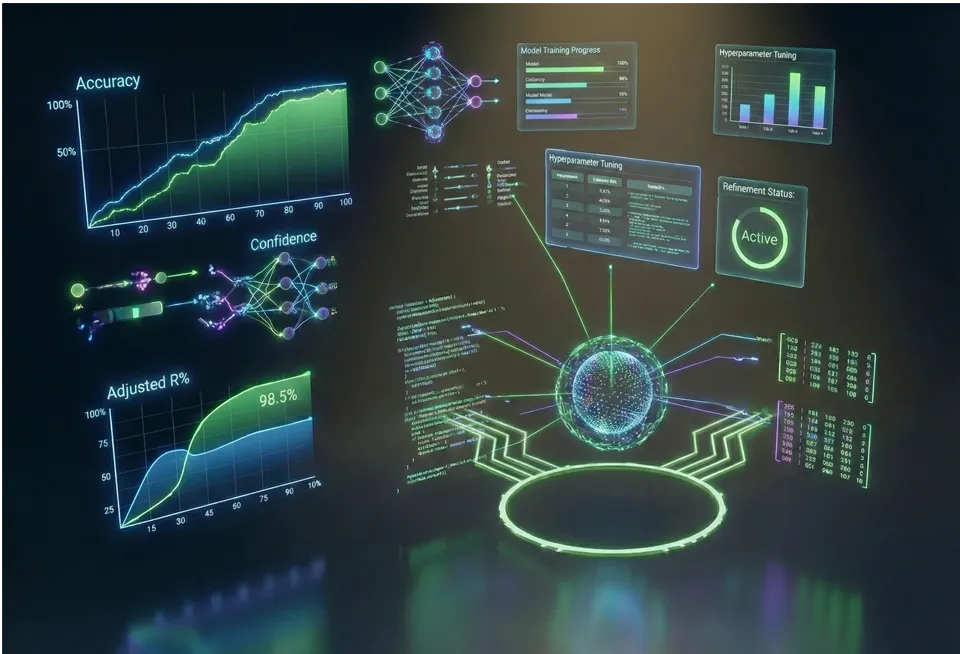

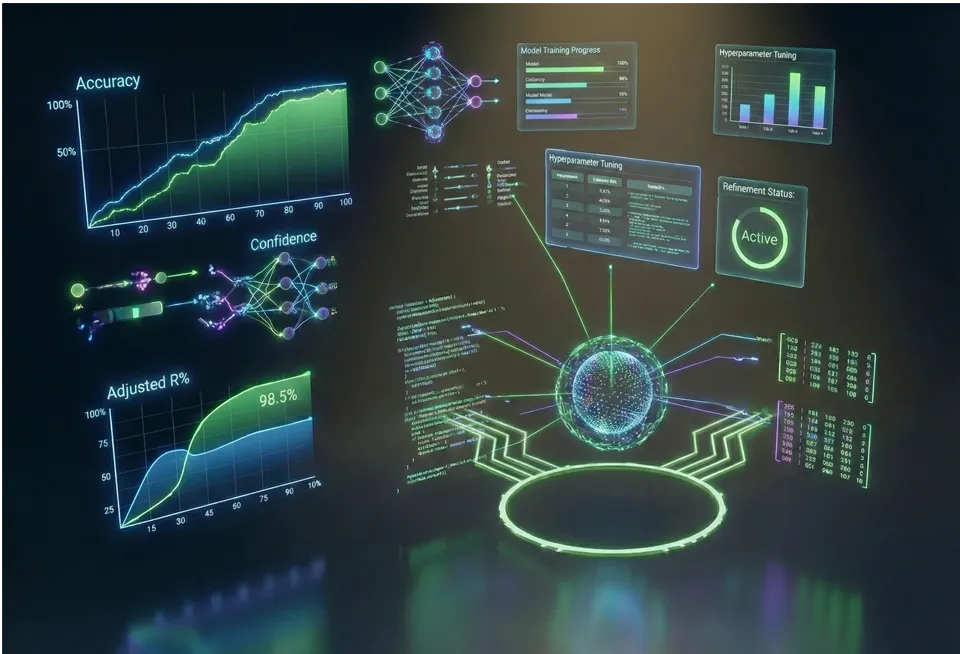

Developing custom machine learning models and algorithms to enable automation, pattern recognition, and predictive analytics.

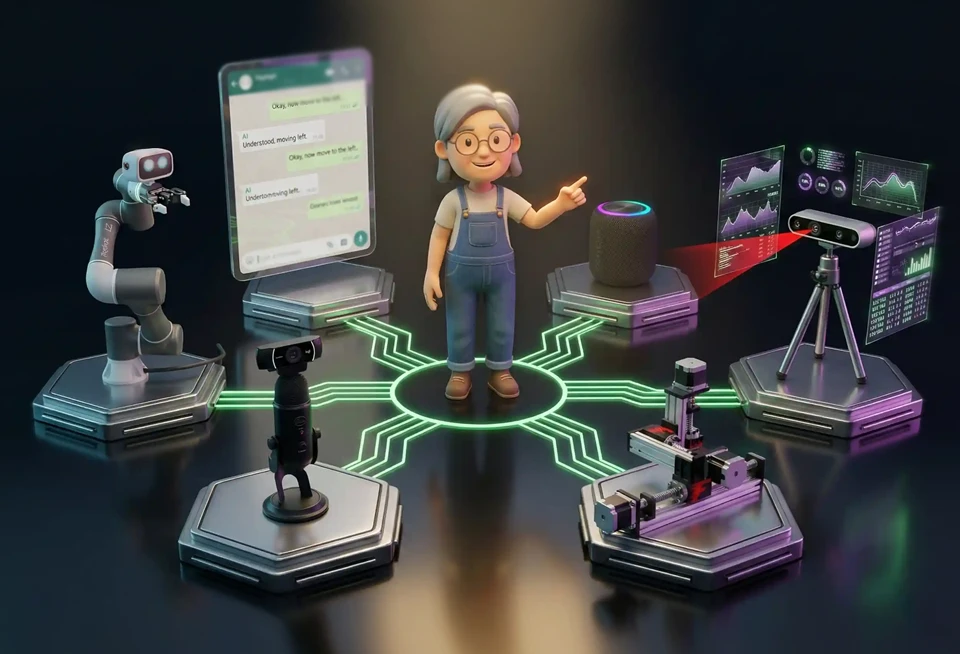

Implementing software robots to automate repetitive and rule-based tasks, improving efficiency and accuracy.

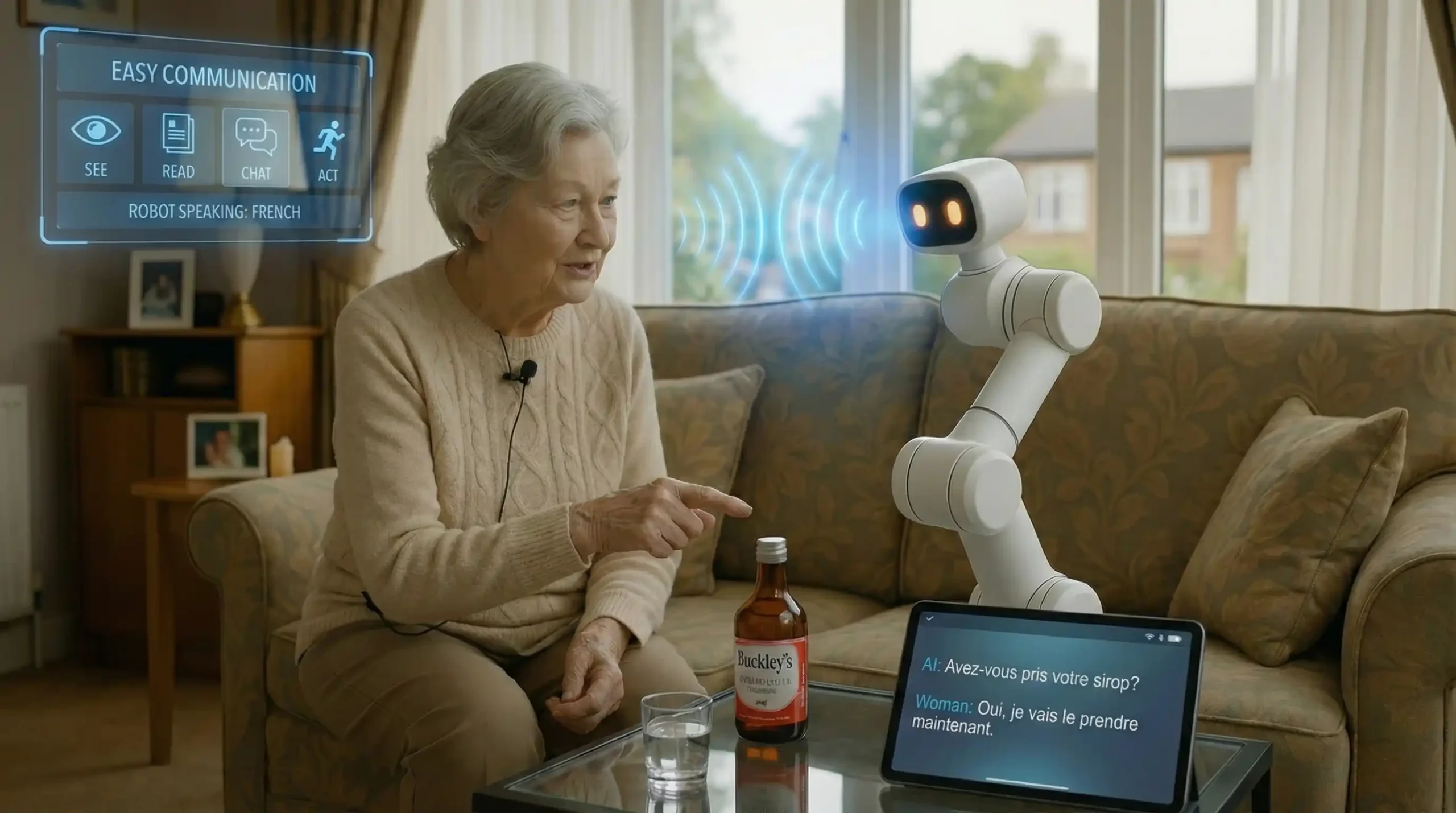

Creating intelligent chatbot systems or virtual assistants that can understand and respond to user queries, improving customer service and support.

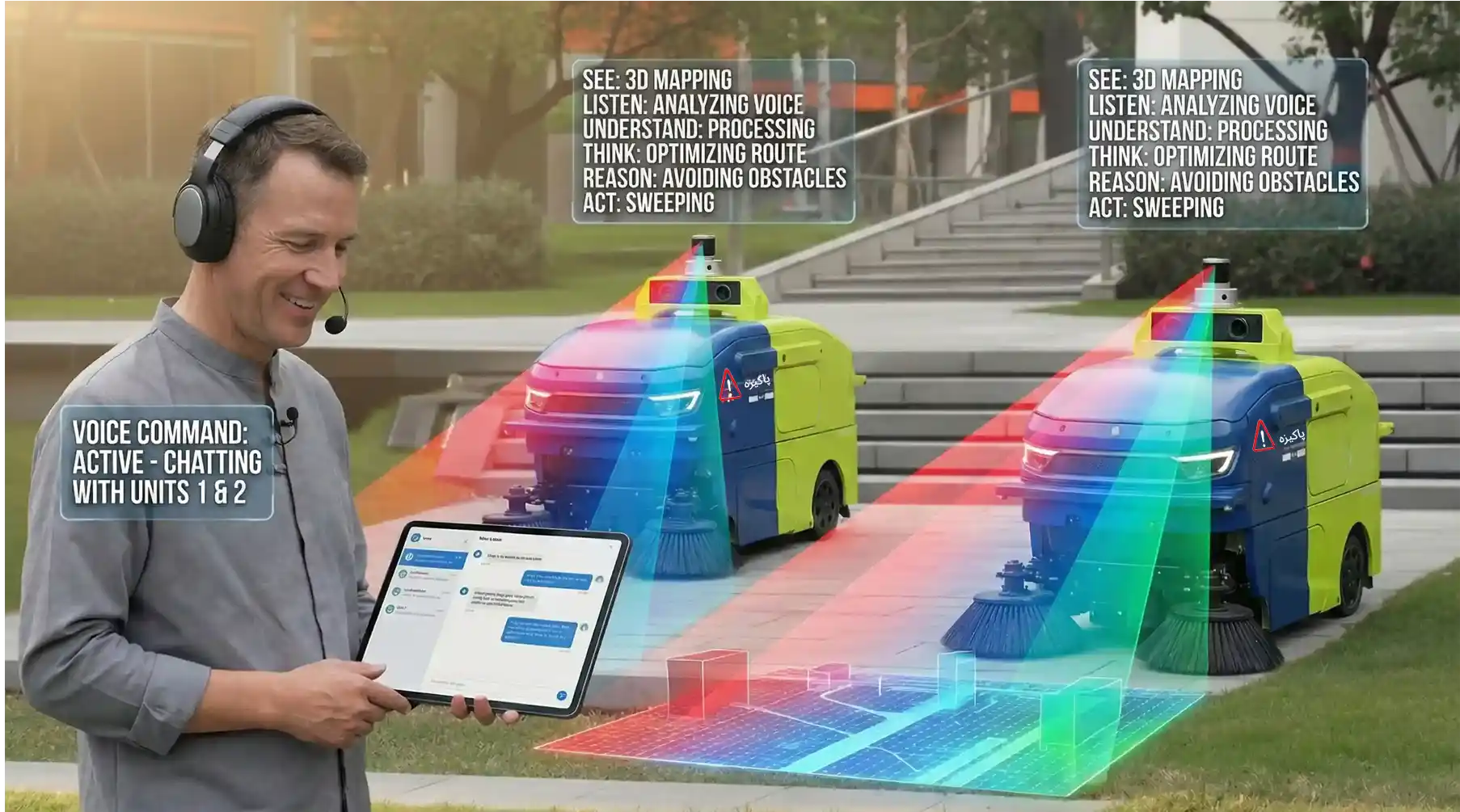

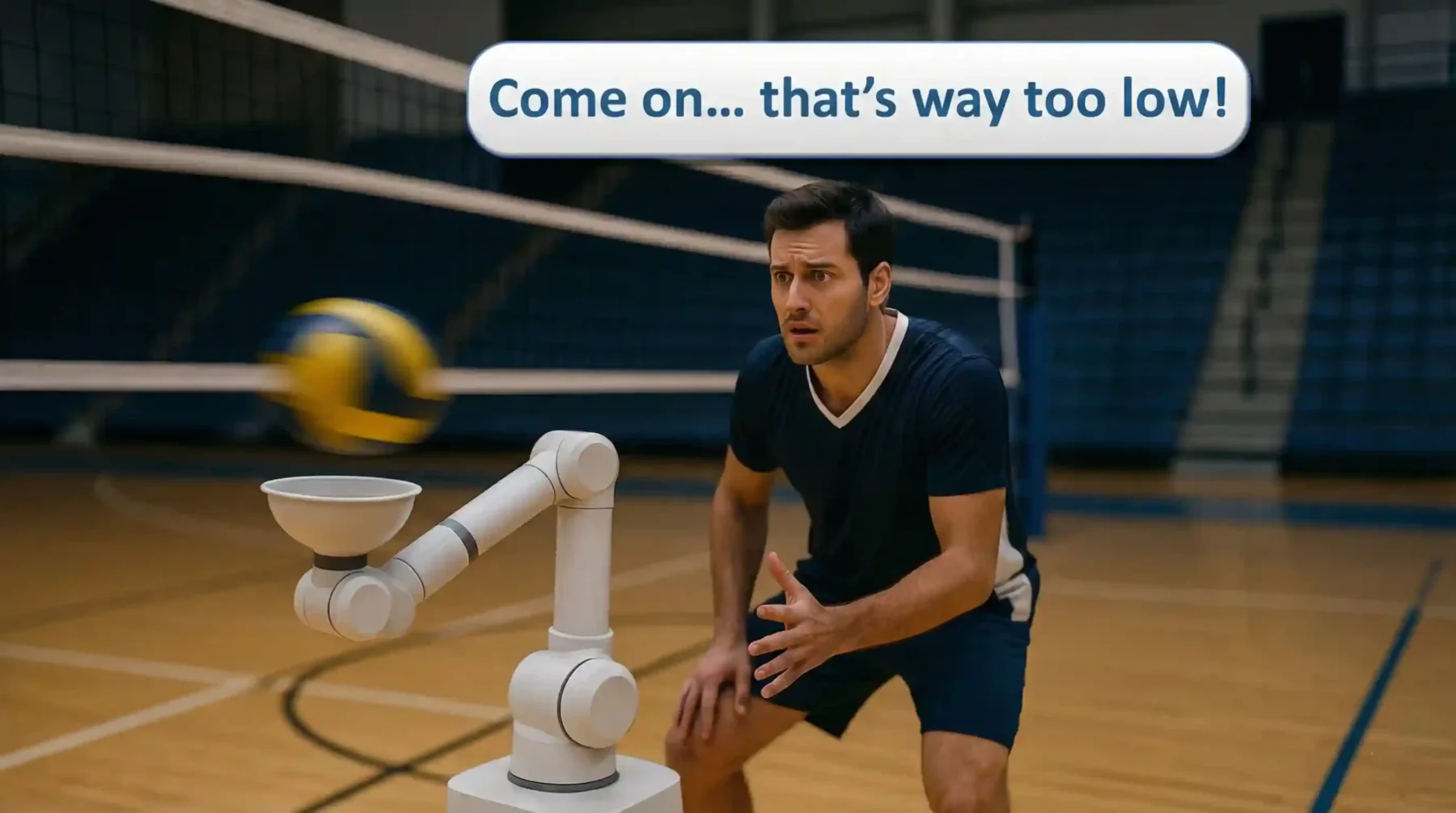

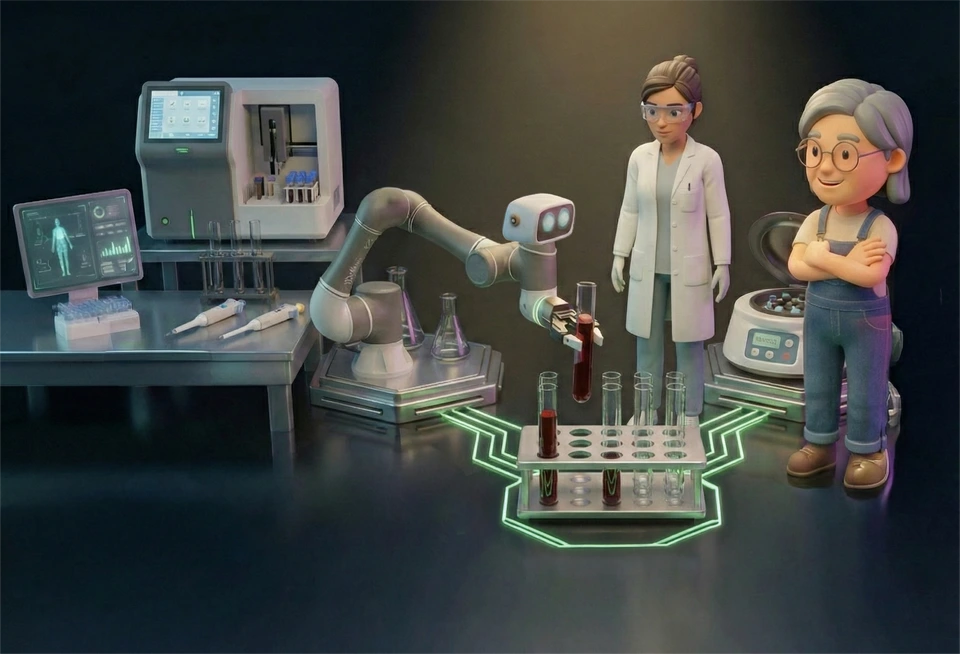

Designing and developing autonomous robots or vehicles capable of intelligent decision-making and navigation.

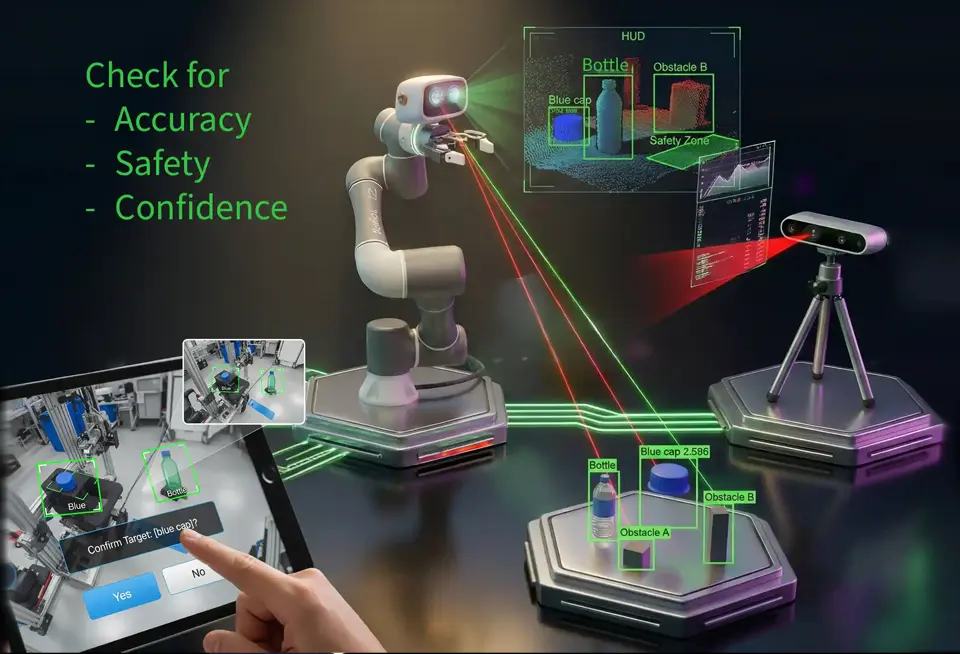

Demonstrated 3X improvement in generalization success rates on unseen objects and instructions compared to conventional methods.

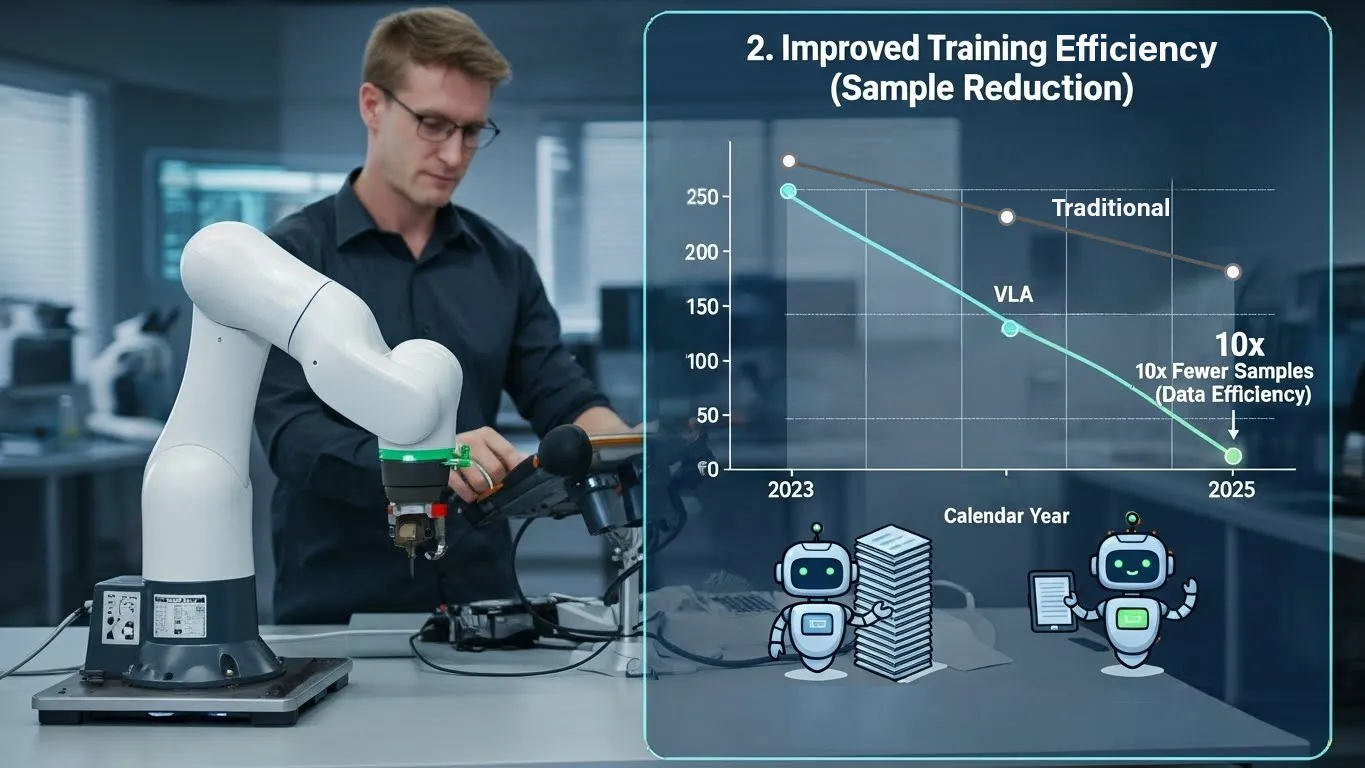

Highly data-efficient for new tasks, thanks to fine-tuning foundation models, reducing human demonstration requirements by 90% in some applications.

Exceptional success rate across broad suites of common household and industrial manipulation tasks.

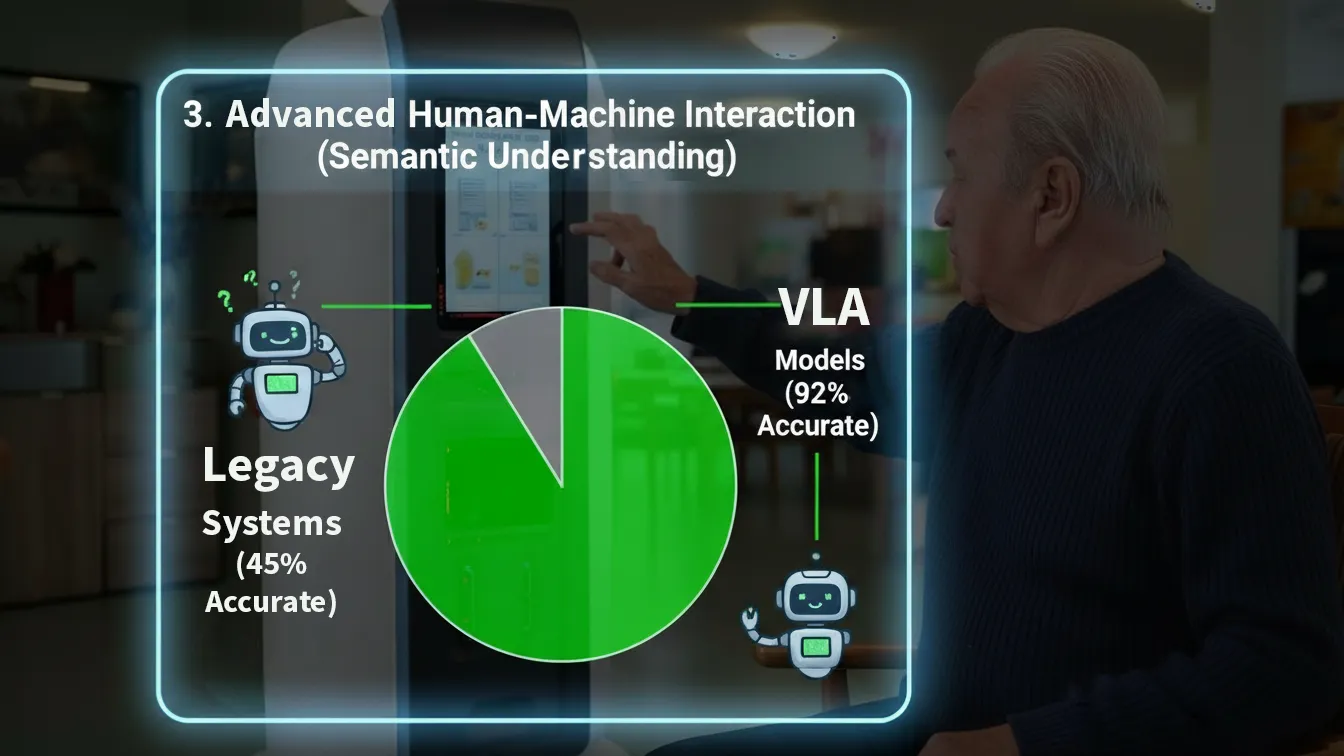

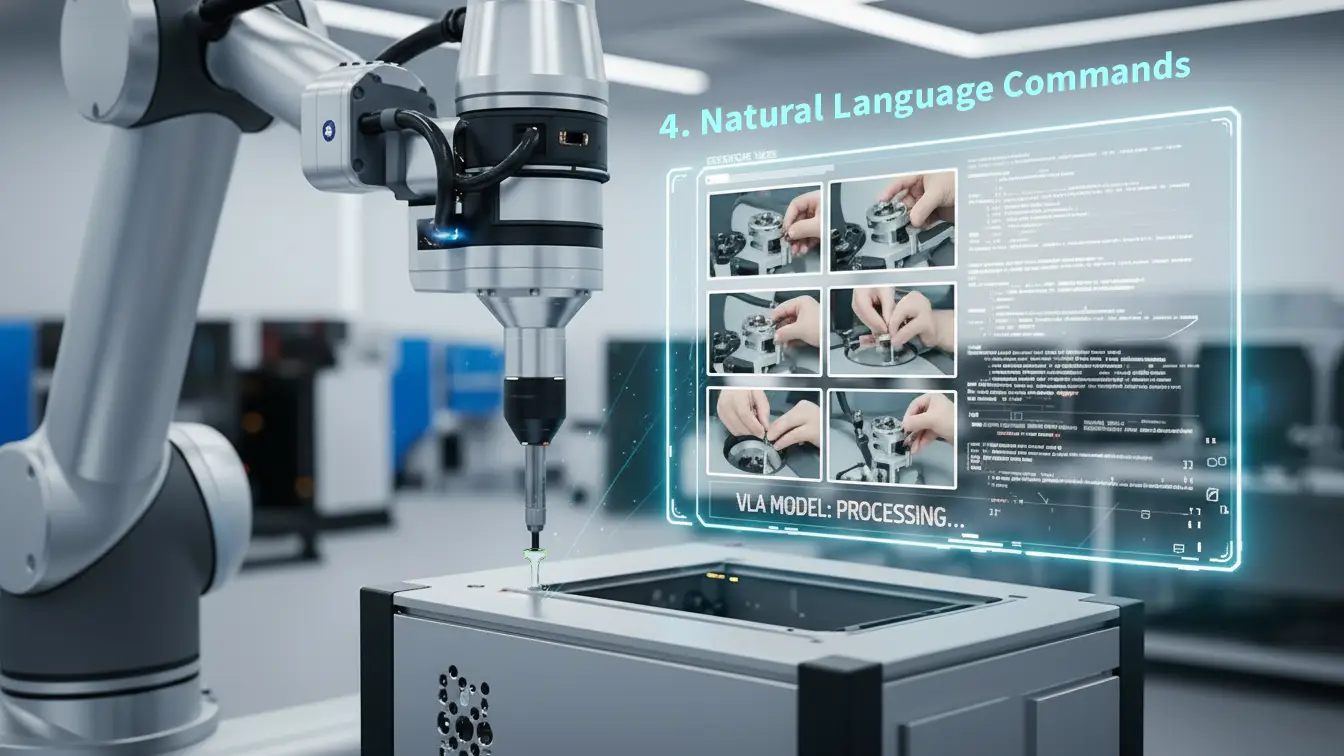

Vision Language Action (VLA) models adapt to new objects and instructions, instantly.

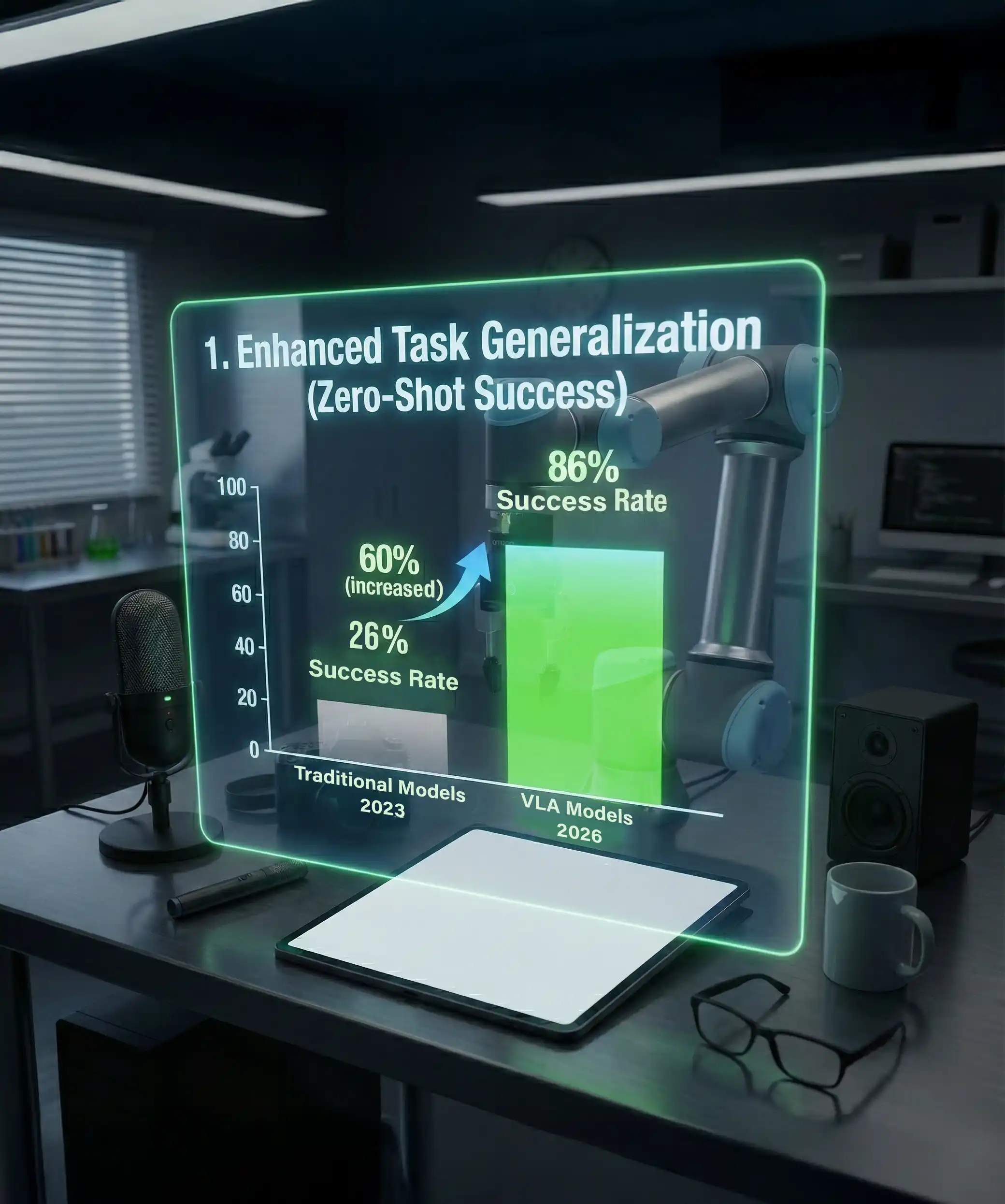

Traditional models vs. advanced VLA models: VLA models achieve an 86% success rate against the 26% success rate of traditional models, a substantial 60-percentage-point improvement and a major technological advancement.
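The gap between the two success rates quoted above is 60 percentage points, which is not the same thing as a 60% relative gain. A minimal sketch of the arithmetic, using only the 26% and 86% figures from this page; the helper name `improvement` is hypothetical and introduced purely for illustration:

```python
def improvement(baseline: float, new: float) -> tuple[float, float]:
    """Return (percentage-point gain, new rate as a multiple of the baseline)."""
    points = new - baseline   # absolute gap, in percentage points
    ratio = new / baseline    # how many times the baseline rate
    return points, ratio


# Figures taken from the comparison above: 26% (traditional) vs 86% (VLA).
points, ratio = improvement(26.0, 86.0)
print(f"{points:.0f} percentage points, {ratio:.1f}x the baseline")
# prints "60 percentage points, 3.3x the baseline"
```

Reporting both numbers side by side avoids ambiguity about whether "60%" is meant as an absolute or a relative gain.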

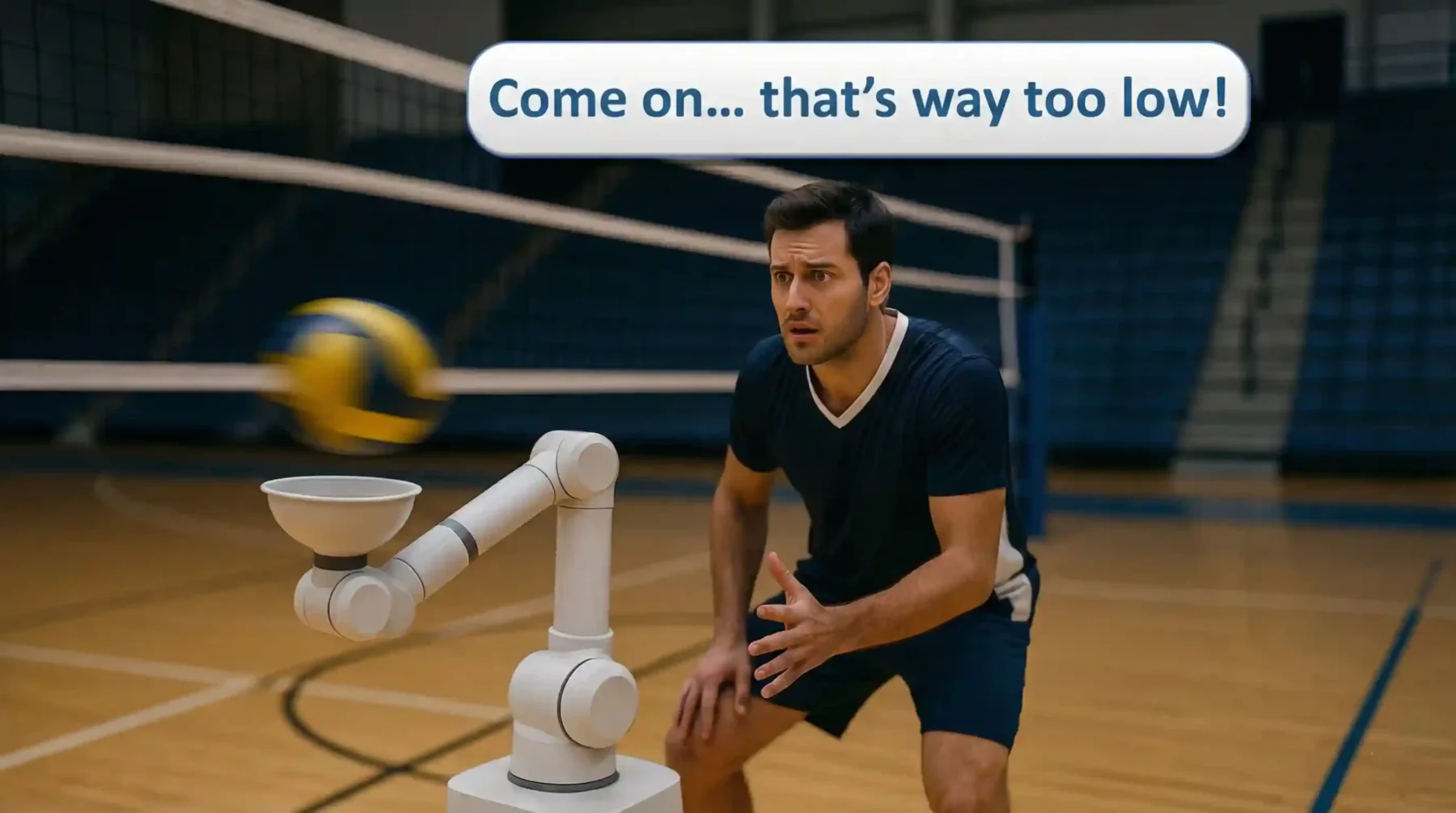

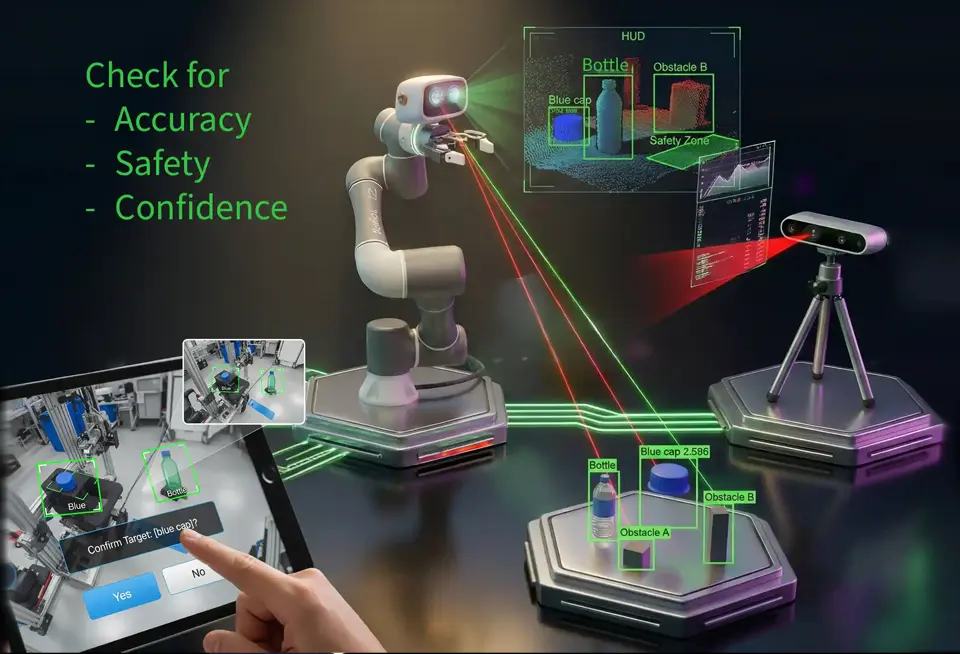

Shifting from rigid machines to common sense: no complex code. Simply assign the machine a task, and it connects what it observes with what you said or typed to work out how to do the job on its own.

2967 Dundas St W, Toronto, ON M6P 1Z2, Canada

Copyright © 2026 - AFRoM Automation All Rights Reserved.
