Enabling Next-gen Automation with Multimodal Cognitive Perception

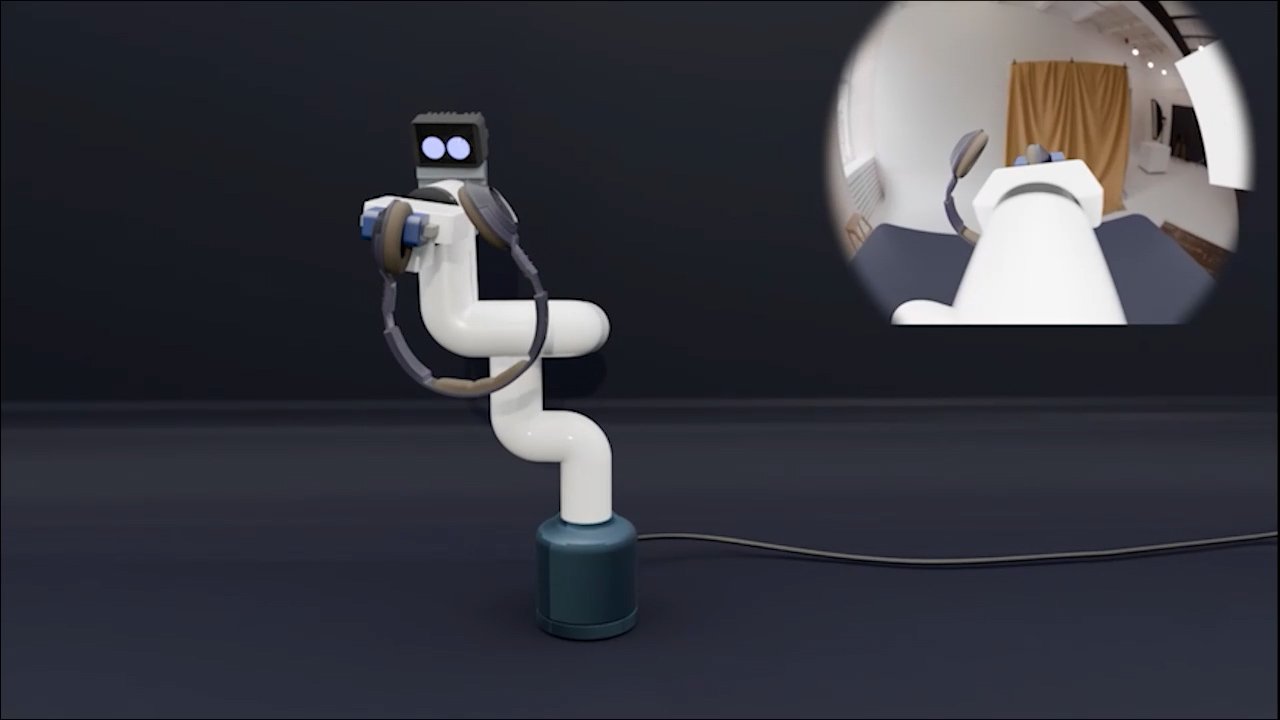

We offer an upgraded myCobot 280, powered by Raspberry Pi and NVIDIA Jetson Orin: a highly capable 6-DOF collaborative robotic arm designed for innovative learning and development.

TOR: Tele-Operate → Replicate enables users to control the robot remotely while it records precise motion and position data. Once the task is demonstrated, the robot autonomously reproduces the same movements with consistency and accuracy. This mode is ideal for tasks that require human intuition during setup but still benefit from repeatable, automated execution afterward.

LGR: Local Guide → Replicate allows users to physically move the robot’s arms, joints, and links to demonstrate a task firsthand. During this process, the robot records precise motion data and learns the exact trajectory. It can then autonomously repeat the same movement with high accuracy, making it an intuitive and hands-on teaching method without the need for coding or remote control.
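
Both Replicate modes described above (TOR and LGR) come down to the same idea: sample joint positions while a person demonstrates, then play the samples back with the original timing. The following is a minimal sketch of that idea only, not the product's implementation. The `Trajectory` class and the `send_angles` and `sleep` callbacks are hypothetical names introduced for illustration; on real hardware `send_angles` would wrap whatever motion command the arm's SDK provides.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence, Tuple

# One sample: (seconds since recording started, six joint angles in degrees).
Sample = Tuple[float, Tuple[float, ...]]


@dataclass
class Trajectory:
    """Hypothetical container for a demonstrated motion on a 6-DOF arm."""
    samples: List[Sample] = field(default_factory=list)

    def record(self, t: float, joint_angles: Sequence[float]) -> None:
        """Store one timestamped reading taken during the demonstration."""
        if len(joint_angles) != 6:
            raise ValueError("expected 6 joint angles for a 6-DOF arm")
        if self.samples and t < self.samples[-1][0]:
            raise ValueError("timestamps must not go backwards")
        self.samples.append((t, tuple(joint_angles)))

    def replay(
        self,
        send_angles: Callable[[List[float]], None],
        sleep: Callable[[float], None],
    ) -> None:
        """Send each sample to the arm, waiting the recorded gap between them."""
        previous_t = None
        for t, angles in self.samples:
            if previous_t is not None:
                sleep(t - previous_t)
            send_angles(list(angles))
            previous_t = t


# Demonstration with stand-ins for the robot and the clock (assumptions).
demo = Trajectory()
demo.record(0.0, [0, 0, 0, 0, 0, 0])
demo.record(0.5, [10, 0, -5, 0, 0, 0])
demo.record(1.0, [20, 5, -10, 0, 0, 0])

sent: List[List[float]] = []
waits: List[float] = []
demo.replay(send_angles=sent.append, sleep=waits.append)
```

Passing the motion command and the sleep function in as callbacks keeps the sketch testable without a robot; swapping in `time.sleep` and a real motion call would be the only change needed to drive hardware.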

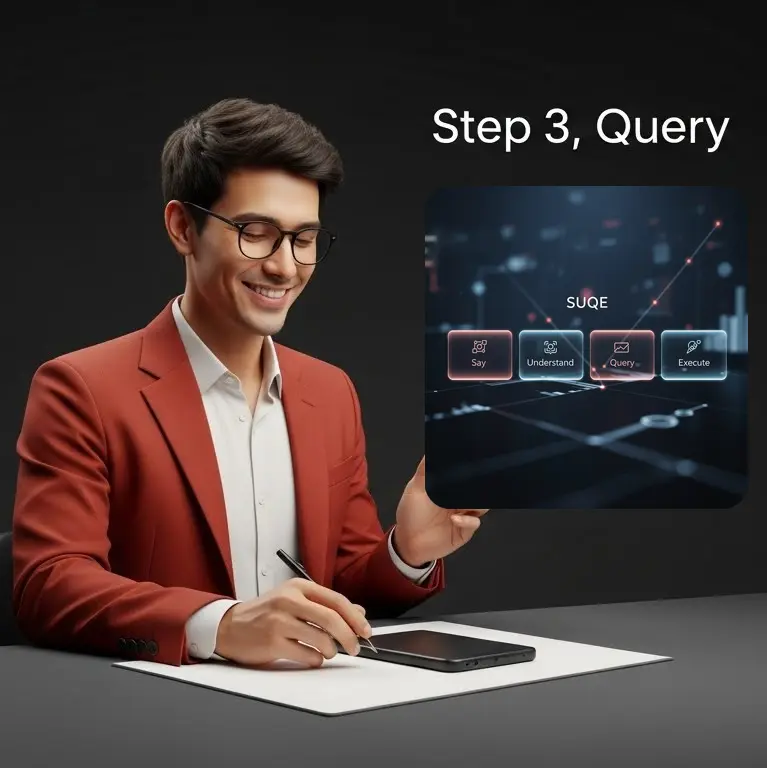

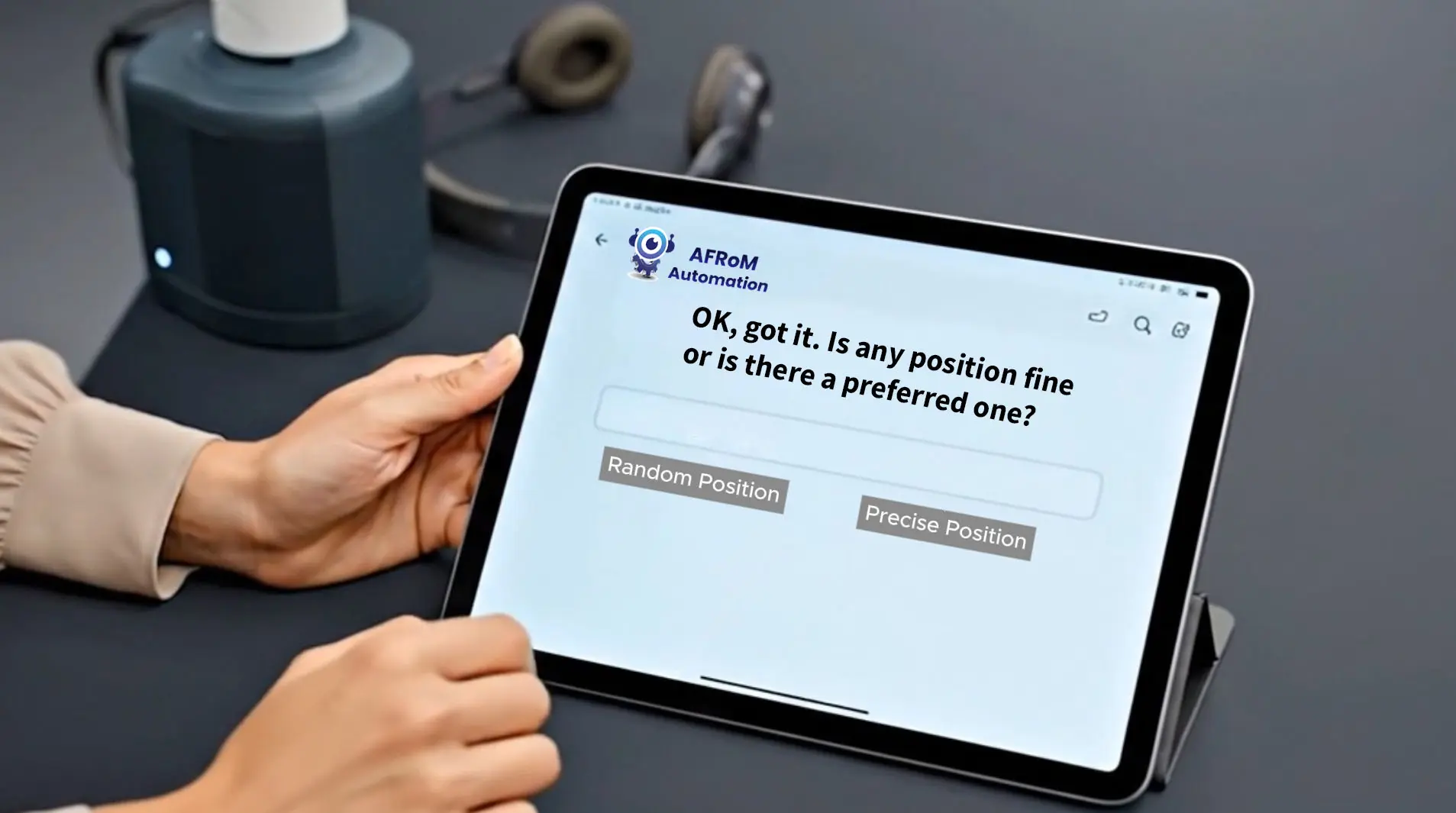

SUQE: Say → Understand → Query → Execute enables fully voice-driven interaction, allowing users to simply speak to the robot to instruct tasks. The system interprets natural language commands, confirms any uncertainties through clarifying questions, and then executes the task safely and reliably. This mode is ideal for quick, simple, and low-risk operations where hands-free communication offers maximum convenience.

LUQE: Look → Understand → Query → Execute empowers the robot to learn purely through visual observation. When a user simply shows the task, the system captures the visual details, interprets the intent, and asks clarifying questions if needed before performing the task autonomously. This mode eliminates the need for voice commands or physical guidance, making it ideal for intuitive, non-intrusive demonstration-based learning.
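
The Understand → Query → Execute steps shared by SUQE and LUQE can be sketched as a small control loop: extract an intent from the input, ask a clarifying question for anything missing, and execute only once the intent is complete. This is an illustrative sketch under simplifying assumptions. It uses keyword matching on text in place of real speech or vision models, and `Intent`, `understand`, `run_command`, and the action and target vocabularies are all hypothetical names, not part of the product.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

# Toy vocabularies (assumptions); a real system would use learned models.
KNOWN_ACTIONS = ("pick", "place", "push")
KNOWN_TARGETS = ("cube", "cup", "pen")


@dataclass
class Intent:
    action: Optional[str] = None
    target: Optional[str] = None


def understand(utterance: str) -> Intent:
    """Understand: pull the first known action and target out of the text."""
    words = re.findall(r"[a-z]+", utterance.lower())
    action = next((w for w in words if w in KNOWN_ACTIONS), None)
    target = next((w for w in words if w in KNOWN_TARGETS), None)
    return Intent(action, target)


def run_command(
    utterance: str,
    ask: Callable[[str], str],
    execute: Callable[[Intent], None],
) -> bool:
    """Run one command; return True only if something was executed."""
    intent = understand(utterance)

    # Query: ask one clarifying question per missing piece of the intent.
    if intent.action is None:
        intent.action = understand(ask("Which action: pick, place, or push?")).action
    if intent.target is None:
        intent.target = understand(ask("Which object: cube, cup, or pen?")).target

    # Still ambiguous after asking: do nothing rather than guess.
    if intent.action is None or intent.target is None:
        return False

    # Execute: hand the complete intent to the motion layer.
    execute(intent)
    return True


# Example run with a scripted user answer and a list standing in for the robot.
executed = []
ok = run_command("Please pick it up.", ask=lambda q: "the cube", execute=executed.append)
```

Refusing to act when the intent is still incomplete reflects the "confirm uncertainties before executing" behavior the two modes describe.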

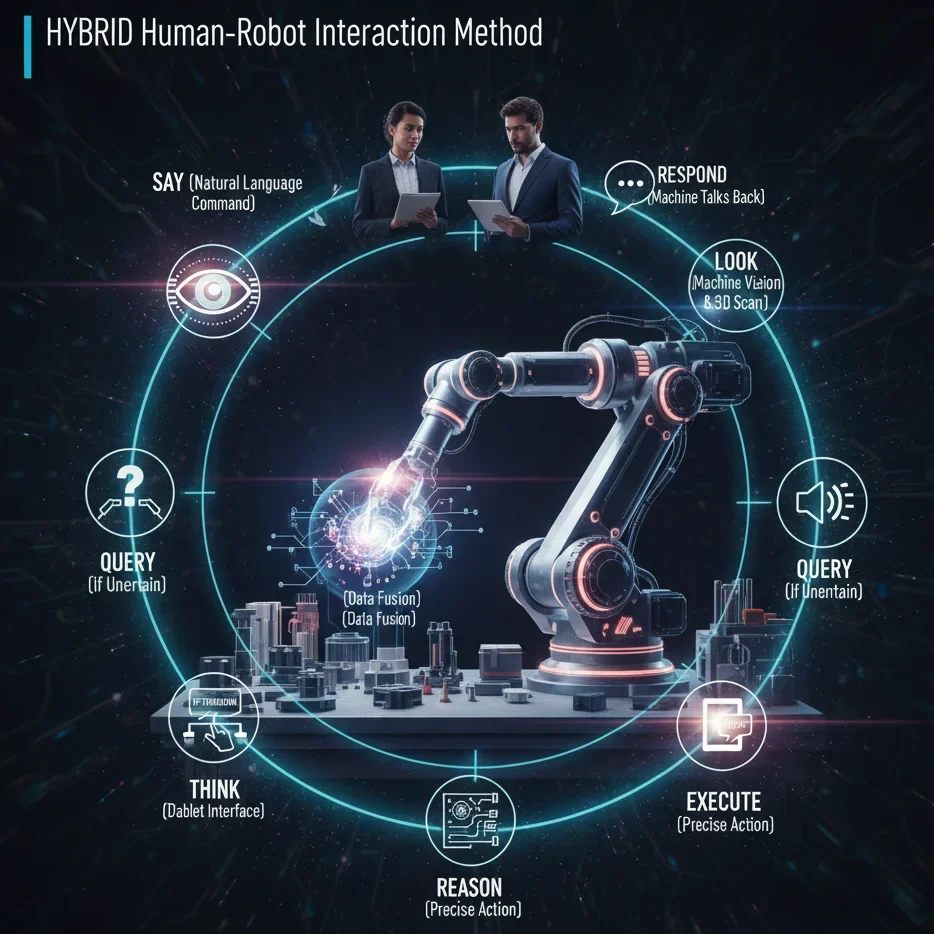

Hybrid: Guide / Tele-operate + Look & Say → UQE combines the strengths of physical guidance, tele-operation, visual learning, and voice interaction into one powerful teaching mode. Users can demonstrate tasks directly, supplement them with visual cues, and refine intentions through speech, while the robot processes the information, clarifies when needed, and executes confidently. This hybrid approach is ideal for complex, precision-critical workflows where reliability, adaptability, and human collaboration are essential.

2967 Dundas St W, Toronto, ON M6P 1Z2, Canada

Copyright © 2026 - AFRoM Automation All Rights Reserved.
